December 26, 2012

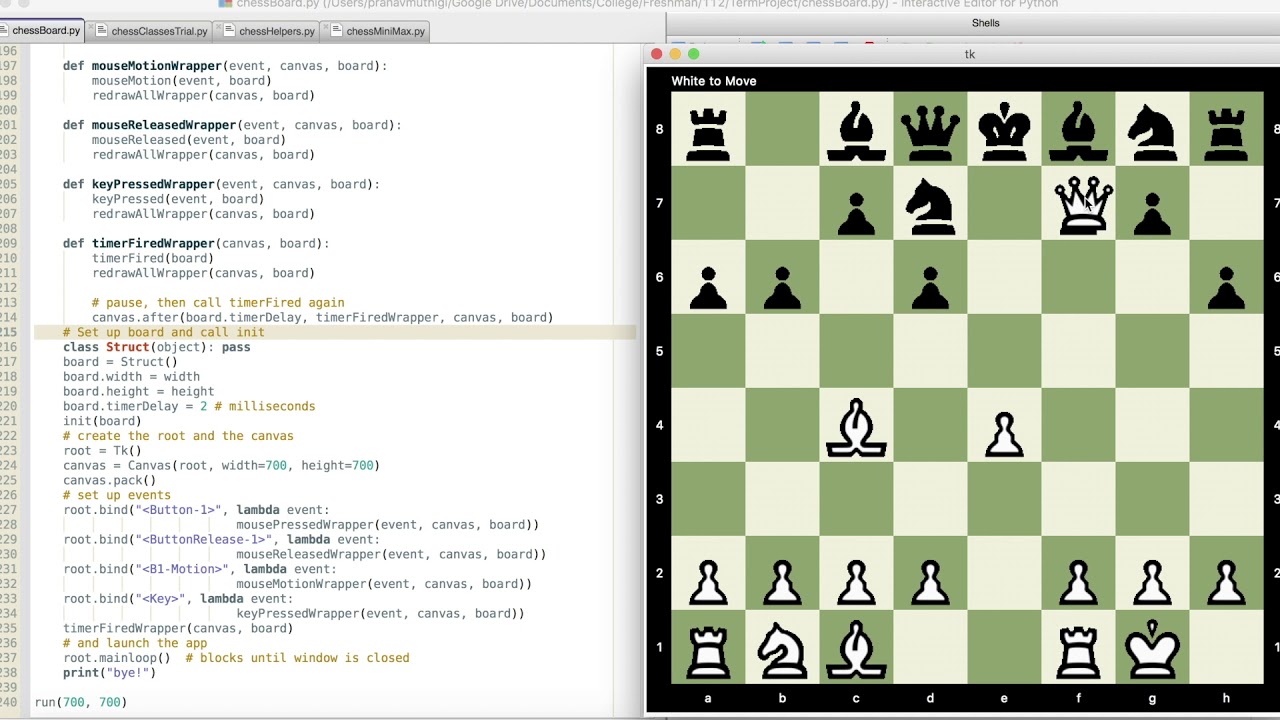

I have always had a lot of respect for chess, despite the fact that I'm not very good at it myself. As I learned more about the game, I also heard about the successes of computer chess AIs, in particular the sensational defeat of Gary Kasparov by Deep Blue. This inspired me to write a primitive chess engine in Python in high school, which played rather abysmally.

Over the summer of 2011, then, I built a new chess engine from scratch in C. This chess engine is good enough to consistently beat me, (faint praise!), and even defeat some of my friends I would call 'advanced amateurs', people with ratings who played on the chess teams of their high schools. My engine even eked out a few victories over the low-end but established engine 'Fairy Max'. Still, the engine is far from good, and there are still some subtle bugs I've yet to squash.

Also, c oding a chess engine in Ruby or Python is a bad idea. Stick to C or C. May the plague get you if you ever think about Java for such a thing. My suggestion would be to check out the general theory behind programs that play games, with particular emphasis on minimax and alpha-beta pruning. Soul ballads free mp3 downloads. Check out Crafty and Stockfish, they are both. PYTHON:Write a program which finds the logarithm of a real number using algorithm. Formula, steps to use, and example input/output included. Steps: Below your program header, enter additional comments in your Python program identifying each part.

In this post I describe how the engine works, together with some of the subproblems I faced and how I solved them (or failed to). After reading this post, you should have a fairly concrete understanding of how my engine works, and have a good idea of how more established engines work too. All the code for the engine is available on github.

- Draw a Chess Board using Graphics Programming in C; Check if any King is unsafe on the Chessboard or not; Number of ways to place two queens on a N.N chess-board; Count of all possible ways to reach a target by a Knight; N-Queen Problem Local Search using Hill climbing with random neighbour.

- So it is no surprise that there are some algorithms that were devised with games in mind. 2 The Min-Max Algorithm The Min-Max algorithm is applied in two player games, such as tic-tac-toe, checkers, chess, go, and so on. All these games have at least one thing in common, they are logic games. This means that they can be described.

- Implements an easy-to-use Stockfish class to integrates the Stockfish chess engine with Python. Install $ pip install stockfish Ubuntu or Debian.

Outline

In outline, my chess engine is very simple: There are essentially two components of the program: evaluation and search. https://hereufil397.weebly.com/file-transer-tool-for-mac.html. The evaluation function takes as input a chess position, and returns an estimate of who is winning and by how much. The search procedure looks through possible moves for both players and finds the best moves.

Evaluation Function

A good evaluation function strikes a balance between estimating the positional advantage accurately, and doing so quickly. Obviously, we want our evaluation function to be accurate, so that the engine makes moves that really do lead to good positions. But the evaluation function also needs to be fast, because the faster it is, the more moves into the future that the engine can look, and consequently the better the engine will play. Mario kart 8 pc emulator mac.

Unfortunately, speed and accuracy are antagonistic. Achieving accuracy requires examining a variety of different features of the position at a high level of detail, which takes more time. For example, the most important component in most evaluation functions, including my own, is the value of the pieces. When I was younger, I was taught the classical piece values: A bishop or knight is worth three pawns, a rook is worth five, and a queen worth nine. This isn't really true, however; the value of a piece also depends on its location on the board and on the rest of the position. A rook on an open file, a 'bad' bishop, or a passed pawn are usually worth more, less, and more than usual, respectively. And these values also differ depending on the opening that was played and the stage of the game.

Only including the classical piece values in your evaluation function is fast and inaccurate. Also including the locations of the pieces on the board is somewhat more accurate and a little slower. Adding on positional adjustments makes the evaluator slower and more accurate, and so on.

My engine's evaluation function is embarrassingly simplistic. As features, it uses which pieces are on the board, some basic pawn structure features, (like the locations of passed and doubled pawns), and some basic board-control features, (namely, for each square, how many pieces of each color are attacking it). The evaluation function is then a linear combination of these features. The weights, I'm embarrassed to say, were all chosen essentially arbitrarily by me; (the pieces, for example, are given their classical values as weights). This is something I'd very much like to improve.

The Naive Search Approach: Minimax

If you've ever played chess, checkers, tic-tac-toe, or any other sequential, deterministic two-player game with complete information, then you probably already have an intuitive idea about how to choose the move you make. The basic idea is this: Suppose you are given a position, and you have enough time to analyze up to a depth of d half-moves made from that position, (where a half-move is a move made by either player). If d = 1, this means that you have enough time only to look at your own moves, but not to look at any of your opponent's responses to them. In this case, you just make the move that leads to the best position evaluation.

If d > 1, however, you can look at all of your opponent's responses to your moves, and perhaps even your responses to your opponents responses, and so on. Your opponent, of course, will try to make the best move for themselves. In fact, they'll be using the same algorithm as you to choose their move; the only difference is that after you've made a move, your opponent can only search up to a depth of d - 1. So the score you give to a position after making one of your initial moves should be the score your opponent gives it after searching up to a depth of d - 1. This is called the minimax algorithm, and a python pseudo-implementation is below:

The Clever Search Approach: Alpha-Beta Pruning

The problem with the naive, minimax approach is that it is incredibly wasteful, as the following example shows: Suppose we are given some position, and after analyzing the first possible move in the position, a pawn move, we see that making it would get us roughly an even position. Now we start examining the second possible move, a bishop move. Our opponent's first response to this second move of ours, we discover, wins the bishop. Obviously, we will never make this bishop move, because we can get an even position with the pawn move we just analyzed, which is much better than losing a bishop. But the minimax algorithm would continue to examine the rest of the opponent's responses to our bishop move, even though we already know that we won't make this move.

A better approach, called alpha-beta pruning, keeps track of the best scores attainable by white and black so far, (respectively, alpha and beta). If it is possible in a given position for white to get a higher score than beta, then black will never allow white to reach this position, and so we can stop evaluating it. The same holds if black can get a lower score than alpha. We initialize alpha and beta to be negative and positive infinity, respectively, since we don't know in advance what the minimax solution will be. It's relatively easy to prove formally that this algorithm will produce the same results as the minimax algorithm. In python, the alpha-beta algorithm is:

Search Details: Move Ordering, Iterative Deepening, and Quiescence

The alpha-beta algorithm depends critically on the order in which we examine the moves. In the example I gave above, for instance, suppose we had looked at the terrible bishop move before the reasonable pawn move. When examining the pawn move, we would have been delighted to discover that we could do better than in the terrible bishop move, but we wouldn't be able to achieve any cutoffs, having already wasted our time analyzing the terrible bishop move completely.

More generally, if the best moves are examined first, one can prove that the alpha-beta algorithm examines about the square root of the number of positions as the minimax algorithm. This is a huge deal: If at every position we can make m possible moves, then the minimax algorithm examines m^d terminal positions, but in the best case the alpha-beta algorithm only looks at about m^(d/2) terminal positions. This means that best-case alpha-beta can search twice as deep as minimax at the same cost! If the moves are examined in the worst possible order, however, then the alpha-beta algorithm will examine as many terminal nodes as the minimax algorithm.

Thus, a great deal of effort should go into determining which moves to look at first. My engine, unfortunately and embarrassingly, does not do this very well at all. The main heuristic my engine uses is sorting the moves based on the piece that is moving and the piece it is capturing, (or none, if the move is not a capture), with parameter values favoring captures of valuable pieces by less valuable pieces. The parameter values used, once again, were arbitrarily chosen by me. This state of affairs is frankly embarrassing, and I'd very much like to improve on it.

So far, we have assumed that we were searching up to some fixed depth d. In practice, however, we don't know in advance how long the searches will take. So instead, we first search to a depth of 1, and then a depth of 2, and so on, until we exceed the allotted search time. This is called iterative deepening. Iterative deepening might seem wasteful, since we only care about the results of the deepest search performed, but it's actually not as bad as you might think:

Firstly, search time grows exponentially with the depth searched, (and with a large growth rate), so that the deepest search accounts for the majority of the total search time. Secondly, at each depth we store the best move in a transposition table, and in deeper searches we examine that move first, improving the move-ordering of the more expensive searches. And finally, we use the same transposition table throughout the entire game. Consequently, suppose we search up to a depth of d, and make some move: If our opponent responds with a move we expected, then we will have already examined the resultant position when calculating our previous move, at a depth of d - 2. Since we stored the results in the transposition table, we can skip the shallow searches in iterative deepening and start examining the resultant position at depth d - 1.

Another issue to consider in search is the problems resulting from searching to any fixed depth. Stalker call of chernobyl console commands. The most significant problem is that the very next move might well do serious damage. For example, suppose that the move leading to the terminal position in a fixed-depth search is a queen move. We evaluate the position with our evaluation function, and, as far as far as we know, it's a great move. The problem is, however, that we may have just moved our queen right into a square attacked by our opponent's pawn, so on the following move he would win our queen.

The fundamental issue here is that the evaluation function should only evaluate positions that are 'quiet', that is, positions without possible tactics. If we search to a fixed depth, then we may end up using the evaluation function on positions that are in the middle of a tactical exchange, leading to the horizon-effect problem I illustrated earlier. Lotus 25 replica. To prevent this problem from occurring, we use what's called a quiescence search at the terminal position: After reaching the end of the alpha-beta search, instead of just taking the position evaluation of the terminal position, we also examine all possible captures and checks, moves that 'dequiet' the position. We then allow a player to select the better of the position evaluation and the (recursive) quiescence scores of the positions following the dequiet moves. This ensures that position evaluations are used only if possible captures sequences lead to worse positions.

Implementation

After understanding how the chess engine works at the algorithmic level, let's turn to the implementation. The word 'implementation' often carries connotations of being straightforward and uninteresting, but I want to emphasize that in fact implementing the algorithms I've talked about above produces a variety of fascinating subproblems, some of which have very, very clever solutions.

Python Chess Tutorial

Data Structures for Chess Positions

The most important implementation decision is determining how to represent a chess position in memory. For simplicity, let's ignore the complications introduced by castling rights, en passant rights, the threefold repetition rule, and keeping track of whose move it is, and let's just consider a 'chess position' to only be where each of the pieces are on the board.

Probably the most obvious way of representing a chess position is with an 8x8 array, with the value at position (i, j) indicating which piece (if any) is at row i and column j. This is the data structure my high school code uses, with a python list of lists. Another slightly less obvious, but still straightforward way to represent a position in memory is to instead have a list of the locations of each piece, with some special 'location' for if the piece has been captured.

These two data structures are opposites, in a way: In the former data structure, the 8x8 array, it's very easy to determine what piece, if any, is on a particular square, (as this is just a single lookup), but hard to determine where a particular kind of piece is, (since this sometimes requires checking every square). In the latter data structure, the list of piece positions, it's very easy to determine where a particular piece is, but hard to determine what piece, if any, is on a particular square. The list of piece positions also has the disadvantage that implementing pawn promotion becomes awkward, since pawn promotions may, for example, cause there to be more than one queen or more than two knights of a particular color.

Yet another option, of course, is to use both of these data structures. This would allow you to use the advantages of both formats, at the cost of writing more memory (and code!), and having to implement pawn promotion in the list of positions data structure. I didn't try this, however, because before I did I read about a completely different, extremely clever data structure: the bitboard.

The bitboard data structure is one of my favorite hacks I've ever seen. It's all based on the simple observation that chess boards have 64 squares, and modern architectures use 64 bit words. If we enumerate the squares of the board from 0 to 63, we can therefore use a 64 bit word to represent which squares have some piece on them. My engine uses 12 of these bitboards: one each for pawns, knights, bishops, rooks, queens, and kings of each color. In addition, I also keep 3 redundant bitboards: one extra bitboard representing all white pieces, another extra representing all black pieces, and a final extra bitboard representing the position of all pieces.

This data structure attains most of the advantages of both the 8x8 array and the list of positions data structures. Determining where all pieces of a particular type are is very easy--just look up the relevant bitboard. It's also easy to determine if a square is occupied--just bitwise AND the bitboard representing all pieces with the relevant bitmask, and check whether the result is nonzero. (It's a little bit harder, however, to determine which piece occupies a given square). But another advantage the bitboard struct has over the previous two data structures is that all the functions operating on a chess position can use very fast bit-level operations.

For example, consider the problem of determining where white pawns may advance. To compute this, we simply bitshift the white pawn bitboard, moving all pawns forward together, and AND the result with the negation of the bitboard representing all the pieces.

Hashing Position Evaluations

The Naive Search Approach: Minimax

If you've ever played chess, checkers, tic-tac-toe, or any other sequential, deterministic two-player game with complete information, then you probably already have an intuitive idea about how to choose the move you make. The basic idea is this: Suppose you are given a position, and you have enough time to analyze up to a depth of d half-moves made from that position, (where a half-move is a move made by either player). If d = 1, this means that you have enough time only to look at your own moves, but not to look at any of your opponent's responses to them. In this case, you just make the move that leads to the best position evaluation.

If d > 1, however, you can look at all of your opponent's responses to your moves, and perhaps even your responses to your opponents responses, and so on. Your opponent, of course, will try to make the best move for themselves. In fact, they'll be using the same algorithm as you to choose their move; the only difference is that after you've made a move, your opponent can only search up to a depth of d - 1. So the score you give to a position after making one of your initial moves should be the score your opponent gives it after searching up to a depth of d - 1. This is called the minimax algorithm, and a python pseudo-implementation is below:

The Clever Search Approach: Alpha-Beta Pruning

The problem with the naive, minimax approach is that it is incredibly wasteful, as the following example shows: Suppose we are given some position, and after analyzing the first possible move in the position, a pawn move, we see that making it would get us roughly an even position. Now we start examining the second possible move, a bishop move. Our opponent's first response to this second move of ours, we discover, wins the bishop. Obviously, we will never make this bishop move, because we can get an even position with the pawn move we just analyzed, which is much better than losing a bishop. But the minimax algorithm would continue to examine the rest of the opponent's responses to our bishop move, even though we already know that we won't make this move.

A better approach, called alpha-beta pruning, keeps track of the best scores attainable by white and black so far, (respectively, alpha and beta). If it is possible in a given position for white to get a higher score than beta, then black will never allow white to reach this position, and so we can stop evaluating it. The same holds if black can get a lower score than alpha. We initialize alpha and beta to be negative and positive infinity, respectively, since we don't know in advance what the minimax solution will be. It's relatively easy to prove formally that this algorithm will produce the same results as the minimax algorithm. In python, the alpha-beta algorithm is:

Search Details: Move Ordering, Iterative Deepening, and Quiescence

The alpha-beta algorithm depends critically on the order in which we examine the moves. In the example I gave above, for instance, suppose we had looked at the terrible bishop move before the reasonable pawn move. When examining the pawn move, we would have been delighted to discover that we could do better than in the terrible bishop move, but we wouldn't be able to achieve any cutoffs, having already wasted our time analyzing the terrible bishop move completely.

More generally, if the best moves are examined first, one can prove that the alpha-beta algorithm examines about the square root of the number of positions as the minimax algorithm. This is a huge deal: If at every position we can make m possible moves, then the minimax algorithm examines m^d terminal positions, but in the best case the alpha-beta algorithm only looks at about m^(d/2) terminal positions. This means that best-case alpha-beta can search twice as deep as minimax at the same cost! If the moves are examined in the worst possible order, however, then the alpha-beta algorithm will examine as many terminal nodes as the minimax algorithm.

Thus, a great deal of effort should go into determining which moves to look at first. My engine, unfortunately and embarrassingly, does not do this very well at all. The main heuristic my engine uses is sorting the moves based on the piece that is moving and the piece it is capturing, (or none, if the move is not a capture), with parameter values favoring captures of valuable pieces by less valuable pieces. The parameter values used, once again, were arbitrarily chosen by me. This state of affairs is frankly embarrassing, and I'd very much like to improve on it.

So far, we have assumed that we were searching up to some fixed depth d. In practice, however, we don't know in advance how long the searches will take. So instead, we first search to a depth of 1, and then a depth of 2, and so on, until we exceed the allotted search time. This is called iterative deepening. Iterative deepening might seem wasteful, since we only care about the results of the deepest search performed, but it's actually not as bad as you might think:

Firstly, search time grows exponentially with the depth searched, (and with a large growth rate), so that the deepest search accounts for the majority of the total search time. Secondly, at each depth we store the best move in a transposition table, and in deeper searches we examine that move first, improving the move-ordering of the more expensive searches. And finally, we use the same transposition table throughout the entire game. Consequently, suppose we search up to a depth of d, and make some move: If our opponent responds with a move we expected, then we will have already examined the resultant position when calculating our previous move, at a depth of d - 2. Since we stored the results in the transposition table, we can skip the shallow searches in iterative deepening and start examining the resultant position at depth d - 1.

Another issue to consider in search is the problems resulting from searching to any fixed depth. Stalker call of chernobyl console commands. The most significant problem is that the very next move might well do serious damage. For example, suppose that the move leading to the terminal position in a fixed-depth search is a queen move. We evaluate the position with our evaluation function, and, as far as far as we know, it's a great move. The problem is, however, that we may have just moved our queen right into a square attacked by our opponent's pawn, so on the following move he would win our queen.

The fundamental issue here is that the evaluation function should only evaluate positions that are 'quiet', that is, positions without possible tactics. If we search to a fixed depth, then we may end up using the evaluation function on positions that are in the middle of a tactical exchange, leading to the horizon-effect problem I illustrated earlier. Lotus 25 replica. To prevent this problem from occurring, we use what's called a quiescence search at the terminal position: After reaching the end of the alpha-beta search, instead of just taking the position evaluation of the terminal position, we also examine all possible captures and checks, moves that 'dequiet' the position. We then allow a player to select the better of the position evaluation and the (recursive) quiescence scores of the positions following the dequiet moves. This ensures that position evaluations are used only if possible captures sequences lead to worse positions.

Implementation

After understanding how the chess engine works at the algorithmic level, let's turn to the implementation. The word 'implementation' often carries connotations of being straightforward and uninteresting, but I want to emphasize that in fact implementing the algorithms I've talked about above produces a variety of fascinating subproblems, some of which have very, very clever solutions.

Python Chess Tutorial

Data Structures for Chess Positions

The most important implementation decision is determining how to represent a chess position in memory. For simplicity, let's ignore the complications introduced by castling rights, en passant rights, the threefold repetition rule, and keeping track of whose move it is, and let's just consider a 'chess position' to only be where each of the pieces are on the board.

Probably the most obvious way of representing a chess position is with an 8x8 array, with the value at position (i, j) indicating which piece (if any) is at row i and column j. This is the data structure my high school code uses, with a python list of lists. Another slightly less obvious, but still straightforward way to represent a position in memory is to instead have a list of the locations of each piece, with some special 'location' for if the piece has been captured.

These two data structures are opposites, in a way: In the former data structure, the 8x8 array, it's very easy to determine what piece, if any, is on a particular square, (as this is just a single lookup), but hard to determine where a particular kind of piece is, (since this sometimes requires checking every square). In the latter data structure, the list of piece positions, it's very easy to determine where a particular piece is, but hard to determine what piece, if any, is on a particular square. The list of piece positions also has the disadvantage that implementing pawn promotion becomes awkward, since pawn promotions may, for example, cause there to be more than one queen or more than two knights of a particular color.

Yet another option, of course, is to use both of these data structures. This would allow you to use the advantages of both formats, at the cost of writing more memory (and code!), and having to implement pawn promotion in the list of positions data structure. I didn't try this, however, because before I did I read about a completely different, extremely clever data structure: the bitboard.

The bitboard data structure is one of my favorite hacks I've ever seen. It's all based on the simple observation that chess boards have 64 squares, and modern architectures use 64 bit words. If we enumerate the squares of the board from 0 to 63, we can therefore use a 64 bit word to represent which squares have some piece on them. My engine uses 12 of these bitboards: one each for pawns, knights, bishops, rooks, queens, and kings of each color. In addition, I also keep 3 redundant bitboards: one extra bitboard representing all white pieces, another extra representing all black pieces, and a final extra bitboard representing the position of all pieces.

This data structure attains most of the advantages of both the 8x8 array and the list of positions data structures. Determining where all pieces of a particular type are is very easy--just look up the relevant bitboard. It's also easy to determine if a square is occupied--just bitwise AND the bitboard representing all pieces with the relevant bitmask, and check whether the result is nonzero. (It's a little bit harder, however, to determine which piece occupies a given square). But another advantage the bitboard struct has over the previous two data structures is that all the functions operating on a chess position can use very fast bit-level operations.

For example, consider the problem of determining where white pawns may advance. To compute this, we simply bitshift the white pawn bitboard, moving all pawns forward together, and AND the result with the negation of the bitboard representing all the pieces.

Hashing Position Evaluations

Another interesting implementation problem involves creating a hash function for chess positions. This hash function should take as input a chess position, and return as output an integer that can be used for lookups in a transposition table. As you'll recall, my engine uses these transposition tables to keep track of positions it has evaluated before, so that it doesn't evaluate the same position twice and so that it can use the information from previous searches to guide its future searches.

The hash function I use is very simple: Before compiling the code, I generate 64*12 = 768 random 64-bit integers, one for each type of piece on each square. For a given position, the hash function is the bitwise XOR of all the random integers that correspond to pieces on particular squares of the board. (I also have a few extra random integers for things like castling rights, side-on-move, and en-passant). This hash function is also very easy to compute: although computing it from scratch would require finding all the proper pieces and XORing all the relevant integers, at runtime the hash function is always computed from the hash of the previous position. If, for example, a white rook moves from h1 to h4, capturing a black pawn, to adjust the hash we simply XOR the original hash with the 'white rook h1', 'white rook h4', and 'black pawn h4', (plus extra adjustments for side-on-move and for castling rights, if necessary).

Chess Source Code Python

This hash function is also extremely easy to analyze theoretically: For any two positions, we can transform the hash of one into the hash of the other by XORing out all the (piece, location) pairs of the first that don't appear in the second, and XORing in all the (piece, location) pairs of the second that don't appear in the first. The procedure above shows that the hash of the second board can be created by XORing n random, independent integers with the hash of the first board. If the two boards are different, then obviously n is greater than zero. Recalling that XOR is just addition mod two, we see that the two hashes will be equal if and only if XORing the n iid integers yields zero. Now, a simple exercise in induction or the properties of binomial coefficients shows that the number of heads in n coin flips is even with probability 1/2, regardless of n. Since the 64 bits in the n random integers are independently 1 with probability 1/2, the probability that the n random integers XOR to zero is 1/2^64, still regardless of n. Thus, the probability that two different positions have the same hash is exactly 1/2^64.